Speed/Load Test Update

Dear Community,

Here are further updates regarding the speed/load test.

What we have finished.

Parallelism is now fully implemented and tested.

You can find a description of what parallelism means via the link below:

https://en.wikipedia.org/wiki/Parallel_computing

What we are doing now.

We are examining the transport code, as we believe it holds a hidden reserve: applying parallelism there could shorten both the round duration and the data transfer time.

We are also optimizing the monitor. Transactions are being generated and displayed as they should, but we are optimizing the rest of the monitor code to make sure it remains synchronized with the node.

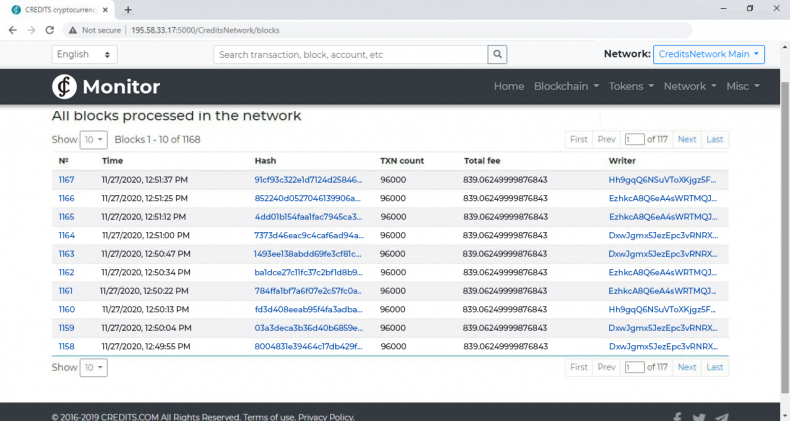

Here is a screenshot with the latest test results:

With a large number of transactions, signature verification becomes a smaller part of the overall node processing time, and we are finding that the main bottlenecks are disk I/O, memory, and synchronization. We are currently looking at ways to optimize these to deliver an outstanding speed/load test.

What do we plan next?

Further optimization of other aspects of the node (disk, memory, etc.) is required, since we have already optimized parallelism as far as we can with respect to computational load.

We will then connect other host servers, getting ready for the speed/load test.

Problems/Issues Found.

We have found that the monitor begins to lag behind the node at speeds greater than 50k TPS. For example, if we run 50k TPS for 40 minutes, the lag grows to around 10 minutes. This needs to be studied and resolved.
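To put these lag figures in perspective, here is a rough back-of-the-envelope calculation. It assumes the lag accumulates linearly (that is, the monitor processes transactions at a constant rate below the node's rate), which we have not yet confirmed. The function name `monitor_backlog` is purely illustrative and not part of the node or monitor code.

```python
def monitor_backlog(input_tps, run_minutes, lag_minutes):
    """Estimate the monitor's effective throughput and backlog from observed lag.

    Assumption (hypothetical): lag grows linearly, so after `run_minutes`
    the monitor displays the state as of `run_minutes - lag_minutes`.
    """
    seconds = run_minutes * 60
    produced = input_tps * seconds                             # transactions generated by the node
    displayed = input_tps * (run_minutes - lag_minutes) * 60   # transactions the monitor has shown
    effective_tps = displayed / seconds                        # average rate the monitor kept up with
    backlog = produced - displayed                             # transactions not yet displayed
    return effective_tps, backlog


effective_tps, backlog = monitor_backlog(50_000, 40, 10)
print(f"effective monitor rate: {effective_tps:.0f} TPS, backlog: {backlog:,} transactions")
```

Under that assumption, the monitor would be keeping up with roughly three quarters of the input rate, which gives us a target for how much the monitor code needs to improve.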

We have also identified an issue where nodes receive the same transaction packets multiple times during the propagation of transactions between nodes. So far, no negative effect has been observed from this. However, we may need to revisit it once information transfer rates approach 20% of the network's capacity.
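For readers wondering how duplicate packets can be detected at all, here is a minimal sketch of one common approach: remembering a hash of every packet already seen. This is an illustration only and not our actual transport code; the class name `PacketDeduplicator` is hypothetical, and a real node would need to bound the memory used by the set of seen hashes.

```python
import hashlib


class PacketDeduplicator:
    """Illustrative duplicate filter; not taken from the node's transport code."""

    def __init__(self):
        self._seen = set()   # digests of packets already accepted (unbounded in this sketch)
        self.duplicates = 0  # how many repeated packets were dropped

    def accept(self, packet: bytes) -> bool:
        """Return True for a packet seen for the first time, False for a repeat."""
        digest = hashlib.sha256(packet).digest()
        if digest in self._seen:
            self.duplicates += 1
            return False
        self._seen.add(digest)
        return True


dedup = PacketDeduplicator()
results = [dedup.accept(p) for p in [b"tx-1", b"tx-2", b"tx-1"]]
print(results, dedup.duplicates)
```

Counting the dropped repeats, as the sketch does, would also let us measure how much bandwidth the duplicates consume as transfer rates increase.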

We have discovered a problem with node stability when synchronizing large blocks. This problem has occurred several times, and we are currently analyzing the log data so that it can be resolved.

Stay with us, and until next time!